With the rapid development of intelligent transportation, end-to-end technology has become the core direction for advancing driver assistance systems. This article outlines the development history of driver assistance, from the 1.0 era of 2D perception to the current 3.0 era of end-to-end technology, presenting the technical features and evolutionary logic of each stage. It then examines the foundational large models that support end-to-end driver assistance, including the core principles and application scenarios of large language models, video generation models, and simulation rendering technologies. Finally, it describes the network architecture, technical support, and training paradigms of end-to-end driver assistance, offering a comprehensive view of this cutting-edge technology.

1. Development History of Driver Assistance Systems

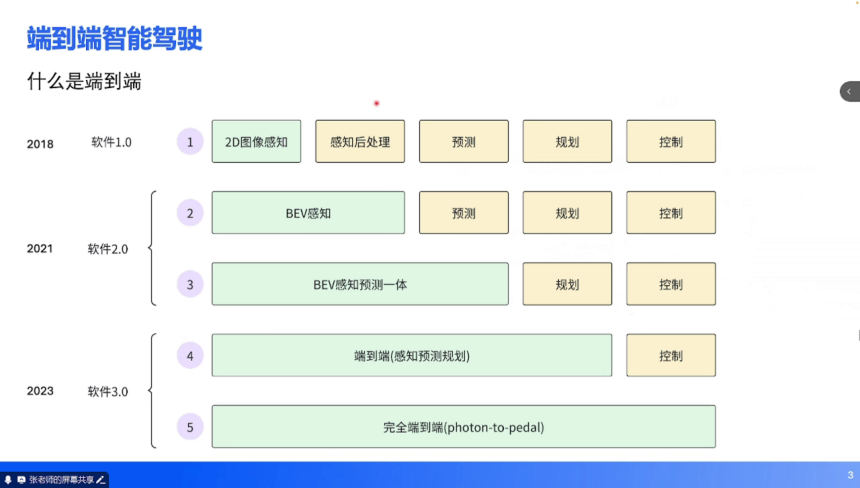

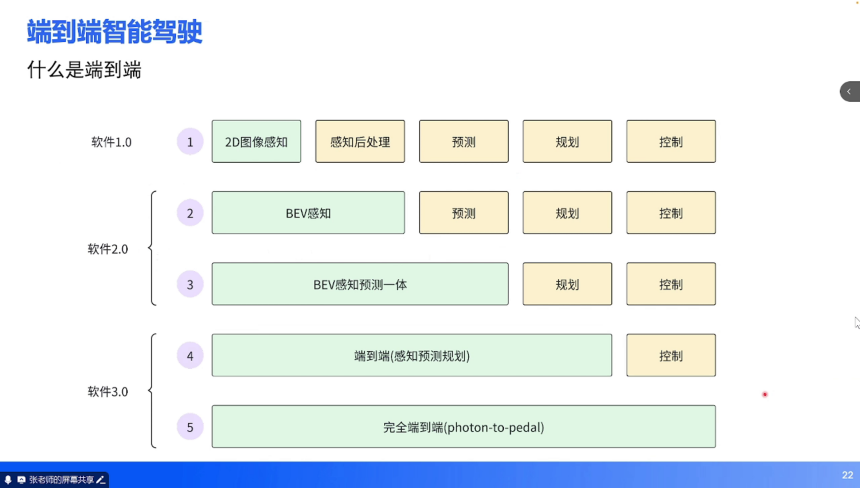

(1) Definition and Evolution Logic of End-to-End Technology: The development of driver assistance can be seen as a succession of technical paradigms, with end-to-end technology marking the latest stage. Industry practice divides this development into five stages, spanning the technological leap from software 1.0 to software 3.0 and beyond:

- Software 1.0 Stage (around 2018): Characterized by the combination of 2D image perception and traditional algorithms. Neural networks were responsible only for the detection and segmentation of 2D images, while subsequent perception post-processing, prediction, planning, and control relied on traditional logic coded in C++.

- Software 2.0 Stage (around 2021): BEV (Bird's Eye View) perception took over. Neural networks moved from 2D to 3D, fusing surround-view information from multiple cameras into a single BEV space, while prediction, planning, and control still relied on traditional algorithms. Toward the end of this stage, systems transitioned to "BEV perception + unified prediction", further improving environmental understanding.

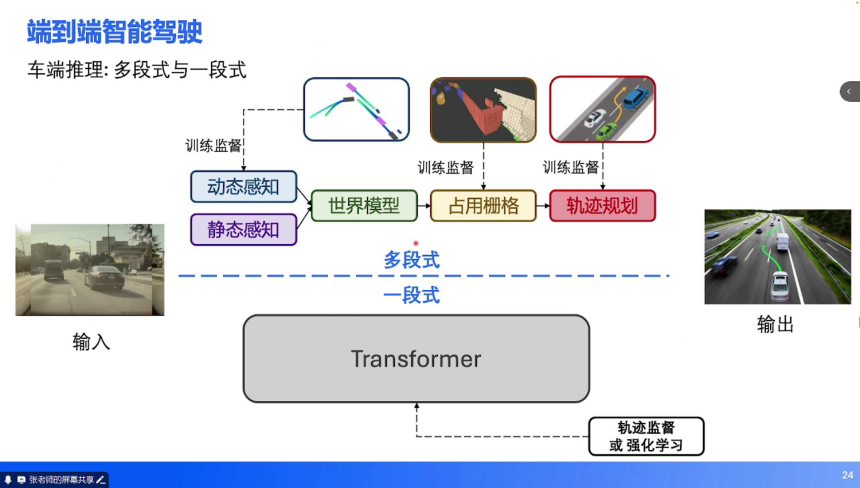

- Software 3.0 Stage (2023 to present): End-to-end technology has become mainstream. The input is camera images, and a neural network directly outputs the desired driving trajectory (covering perception, prediction, and planning), leaving only control to traditional algorithms.

- Future Trends: A fully end-to-end system (Photon-to-Pedal), in which neural networks drive the entire chain from photons entering the sensor to pedal control output, is still at the exploratory stage.
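To make the division of labor across stages concrete, here is a toy Python encoding of the stage list above, recording which pipeline modules are handled by a neural network in each stage. It is a simplification under stated assumptions: the stage labels (including a hypothetical `"software 2.0+"` entry for the late-2.0 "BEV perception + unified prediction" transition) and the four-module split are illustrative, and in software 1.0 only the 2D detection part of perception is actually neural.

```python
from enum import Enum


class Impl(Enum):
    NEURAL = "neural network"
    RULES = "hand-written rules (e.g. C++)"


# Pipeline modules in the order data flows through them.
MODULES = ("perception", "prediction", "planning", "control")

# Modules handled by a neural network in each stage (illustrative labels).
# Simplification: in software 1.0 only 2D detection/segmentation is neural;
# its 2D-to-3D post-processing is still rule-based.
NEURAL_MODULES = {
    "software 1.0": {"perception"},
    "software 2.0": {"perception"},                 # BEV perception
    "software 2.0+": {"perception", "prediction"},  # hypothetical label: BEV + unified prediction
    "software 3.0": {"perception", "prediction", "planning"},
    "photon-to-pedal": set(MODULES),                # still exploratory
}


def pipeline(stage: str) -> list[tuple[str, Impl]]:
    """Pair each module with how it is implemented in the given stage."""
    learned = NEURAL_MODULES[stage]
    return [(m, Impl.NEURAL if m in learned else Impl.RULES) for m in MODULES]


def rule_based(stage: str) -> list[str]:
    """Modules still implemented with traditional, hand-written logic."""
    return [m for m, impl in pipeline(stage) if impl is Impl.RULES]


for stage in NEURAL_MODULES:
    print(f"{stage:>16}: rules -> {rule_based(stage) or 'none'}")
```

Reading the printed lines top to bottom, the rule-based remainder shrinks from prediction, planning, and control, to control alone in software 3.0, and finally to nothing in the Photon-to-Pedal vision.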

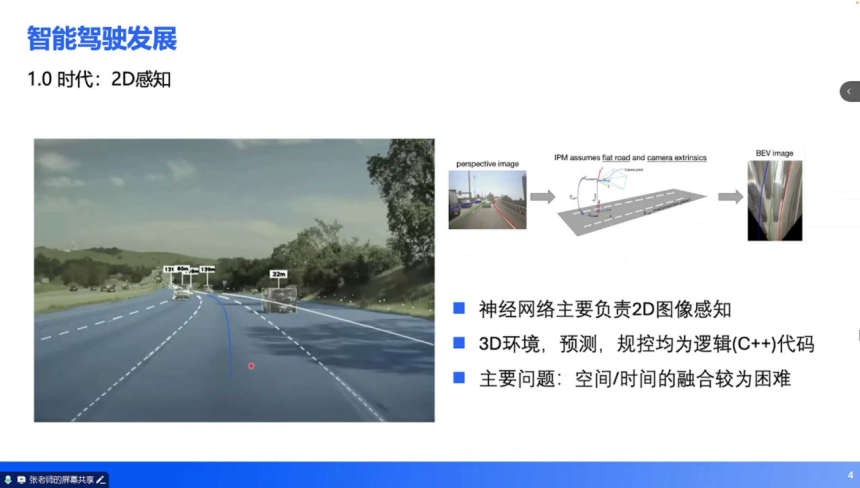

(2) Characteristics and Bottlenecks of 2D Perception in the 1.0 Era: From 2018 to 2019, deep learning matured in 2D visual tasks, pushing driver assistance into the 1.0 era. The core technology of this stage was 2D image perception, characterized by:

- Technical Implementation: Neural networks took 2D images as input and output 2D detection boxes (vehicles, pedestrians), lane lines, and drivable-area segmentation. Because driving takes place in 3D space, traditional algorithms (C++) then had to post-process these outputs, projecting the 2D information into 3D.

- Core Bottlenecks: Fusing results from multiple cameras into one consistent spatial picture was difficult, there was no fusion across time, and there was no closed data feedback loop, all of which hindered overall performance optimization.
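The 2D-to-3D post-processing described above can be illustrated with the classic flat-ground back-projection for a single camera. This is a sketch, not any particular vendor's code: it assumes a pinhole camera with zero pitch and roll, a perfectly flat road, and hypothetical intrinsics and mounting height. Production systems also have to handle calibration, pitch changes, and slopes.

```python
from dataclasses import dataclass


@dataclass
class PinholeCamera:
    fx: float        # focal length in pixels, horizontal
    fy: float        # focal length in pixels, vertical
    cx: float        # principal point, x (pixels)
    cy: float        # principal point, y (pixels)
    height_m: float  # mounting height above the road (metres)


def ground_point_from_pixel(cam: PinholeCamera, u: float, v: float) -> tuple[float, float]:
    """Back-project the pixel where an object touches the road onto a flat ground plane.

    Assumes a level, forward-facing camera (zero pitch and roll) and a perfectly
    flat road. Returns (forward distance, lateral offset to the right) in metres.
    """
    dv = v - cam.cy  # pixels below the horizon row
    if dv <= 0:
        raise ValueError("pixel is on or above the horizon; the ray never hits the ground")
    forward = cam.fy * cam.height_m / dv  # similar triangles: Z = fy * h / (v - cy)
    lateral = (u - cam.cx) * forward / cam.fx
    return forward, lateral


cam = PinholeCamera(fx=1000.0, fy=1000.0, cx=960.0, cy=540.0, height_m=1.5)
# Bottom centre of a detection box, e.g. from a 2D detector.
print(ground_point_from_pixel(cam, 960.0, 640.0))  # (15.0, 0.0)
# A one-pixel error near the horizon shifts the estimate by several metres.
print(ground_point_from_pixel(cam, 960.0, 560.0)[0], ground_point_from_pixel(cam, 960.0, 561.0)[0])
```

The last line hints at why this approach was fragile: near the horizon, a single pixel of error in the box's bottom edge moves the distance estimate by roughly 3.6 m, and nothing in such a per-camera, per-frame pipeline fuses evidence across cameras or time to correct it.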

(3) Breakthroughs and Limitations of BEV Perception in the 2.0 Era: The adoption of the Transformer architecture for multi-camera perception around 2021 ushered driver assistance into the 2.0 era, making BEV perception the core technology.

- Technological Innovations: The Transformer architecture could directly convert multi-camera 2D image inputs into 3D features in BEV space. Later versions integrated prediction tasks and introduced occupancy networks, enhancing the understanding of complex scenes.

- Capability Boundaries: Supported highway NOA (Navigation Assisted Driving) and some urban functions; the systems were usable but still required driver takeovers.

- Core Limitations: The downstream planning and control modules received only structured perception results (such as boxes, lane lines, and occupancy), which lack rich scene semantics; this limited performance in complex scenarios.
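The geometric step behind multi-camera BEV lifting can be sketched as follows: lay a grid of reference points on the ground around the ego vehicle, project each point into every camera, and record the pixels where its features would be sampled. Query-based methods such as BEVFormer learn, with attention, how to weight and aggregate image features near such projected locations; this sketch shows only the geometry and omits the learned part. Its conventions are assumptions: ego frame with x forward, y left, z up; yaw-only camera mounts; and a hypothetical four-camera rig with identical intrinsics.

```python
import math
from dataclasses import dataclass


@dataclass
class Camera:
    name: str
    fx: float
    fy: float
    cx: float
    cy: float
    width: int
    height: int
    yaw_rad: float                        # optical-axis heading in the ego frame (0 = forward, + = left)
    position: tuple[float, float, float]  # mounting point (x forward, y left, z up), metres


def project_to_camera(cam: Camera, point):
    """Project an ego-frame 3D point into (u, v) pixels, or return None if the camera cannot see it."""
    dx, dy, dz = (p - q for p, q in zip(point, cam.position))
    # Undo the camera's yaw so its optical axis points along +forward.
    c, s = math.cos(cam.yaw_rad), math.sin(cam.yaw_rad)
    fwd = c * dx + s * dy
    left = -s * dx + c * dy
    # Convert to the usual camera convention: x right, y down, z along the optical axis.
    xc, yc, zc = -left, -dz, fwd
    if zc <= 1e-6:
        return None  # point is behind the camera
    u = cam.fx * xc / zc + cam.cx
    v = cam.fy * yc / zc + cam.cy
    if 0 <= u < cam.width and 0 <= v < cam.height:
        return (u, v)
    return None  # projects outside the image


def bev_cell_centers(x_min, x_max, y_min, y_max, cell_m, z=0.0):
    """Centres of a regular BEV grid on the ground plane (z = 0 by default)."""
    nx = round((x_max - x_min) / cell_m)
    ny = round((y_max - y_min) / cell_m)
    return [(x_min + (i + 0.5) * cell_m, y_min + (j + 0.5) * cell_m, z)
            for i in range(nx) for j in range(ny)]


def sampling_locations(cameras, cells):
    """For every BEV cell, list the (camera, u, v) pixels whose features it would gather."""
    result = {}
    for cell in cells:
        hits = []
        for cam in cameras:
            uv = project_to_camera(cam, cell)
            if uv is not None:
                hits.append((cam.name, uv[0], uv[1]))
        result[cell] = hits
    return result


def make_cam(name, yaw_deg):
    # Hypothetical, identical intrinsics for every camera; 1.5 m mounting height.
    return Camera(name, 800.0, 800.0, 960.0, 540.0, 1920, 1080,
                  math.radians(yaw_deg), (0.0, 0.0, 1.5))


rig = [make_cam("front", 0), make_cam("left", 90), make_cam("rear", 180), make_cam("right", -90)]
cells = bev_cell_centers(-30, 30, -30, 30, cell_m=10.0)
for cell, hits in sampling_locations(rig, cells).items():
    print(cell, [name for name, _, _ in hits])
```

Cells near the diagonals are seen by two adjacent cameras. In a learned BEV model, that overlap is where multi-view fusion happens inside the network rather than in hand-written post-processing.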

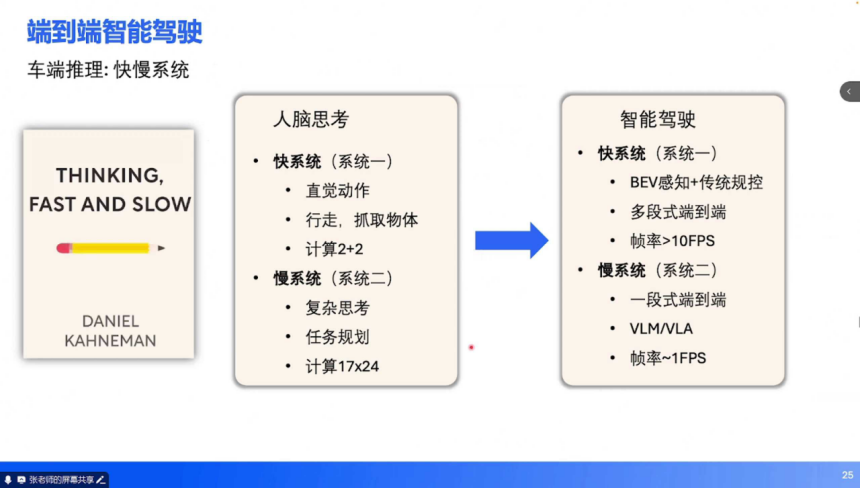

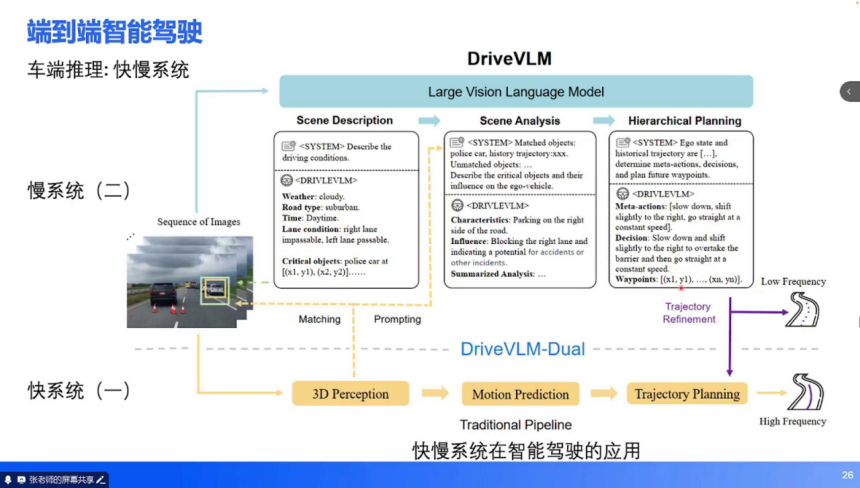

(4) Capability Upgrades and Challenges of End-to-End Technology in the 3.0 Era: Since 2023, the integration of large model technologies into driver assistance has driven end-to-end technology toward maturity.

- Core Capabilities: Universal obstacle understanding, long-distance navigation integration, complex road structure analysis, and human-like trajectory planning.

- Technical Challenges: Unstable performance and conflicts between the end-to-end model's decisions and the redundant safety systems remain open engineering problems.
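Human-like trajectory planning is commonly pursued by training the network's waypoint output to imitate trajectories recorded from human drivers, and by checking it with displacement metrics. The sketch below shows two common choices, an L1 imitation loss and average/final displacement error (ADE/FDE). It assumes time-aligned 2D waypoints in the ego frame and is not the specific loss of any particular system.

```python
import math

Waypoint = tuple[float, float]  # (x, y) in metres, ego frame


def _check(planned: list[Waypoint], human: list[Waypoint]) -> None:
    if not planned or len(planned) != len(human):
        raise ValueError("trajectories must be non-empty and have the same number of waypoints")


def displacement_errors(planned: list[Waypoint], human: list[Waypoint]) -> tuple[float, float]:
    """Average (ADE) and final (FDE) displacement error between two time-aligned trajectories."""
    _check(planned, human)
    dists = [math.dist(p, h) for p, h in zip(planned, human)]
    return sum(dists) / len(dists), dists[-1]


def imitation_l1_loss(planned: list[Waypoint], human: list[Waypoint]) -> float:
    """Mean absolute coordinate error, a typical regression target when imitating human driving."""
    _check(planned, human)
    total = sum(abs(p[0] - h[0]) + abs(p[1] - h[1]) for p, h in zip(planned, human))
    return total / (2 * len(planned))


planned = [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
human = [(1.0, 0.0), (2.0, 1.0), (3.0, 2.0)]
print(displacement_errors(planned, human))  # (1.0, 2.0)
print(imitation_l1_loss(planned, human))    # 0.5
```

ADE summarizes how closely the whole plan follows the human one, while FDE isolates where the plan ends up; a planner can score well on one and poorly on the other, which is why both are usually reported.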

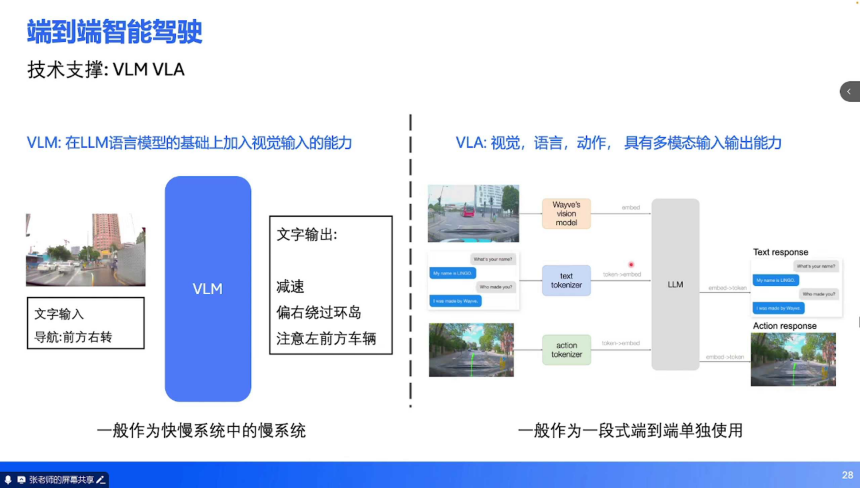

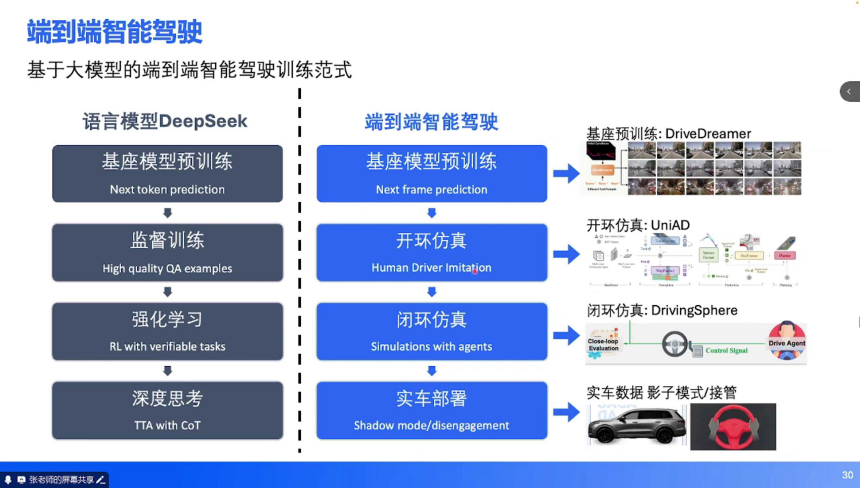

2. Foundation of Large Models: Underlying Support for End-to-End Technology

The maturity of end-to-end driver assistance depends on three types of large model technologies: large language models (LLM), video generation models (Diffusion), and simulation rendering technologies (NeRF/3DGS). Together, they build a full-chain capability for perception, decision-making, and validation.

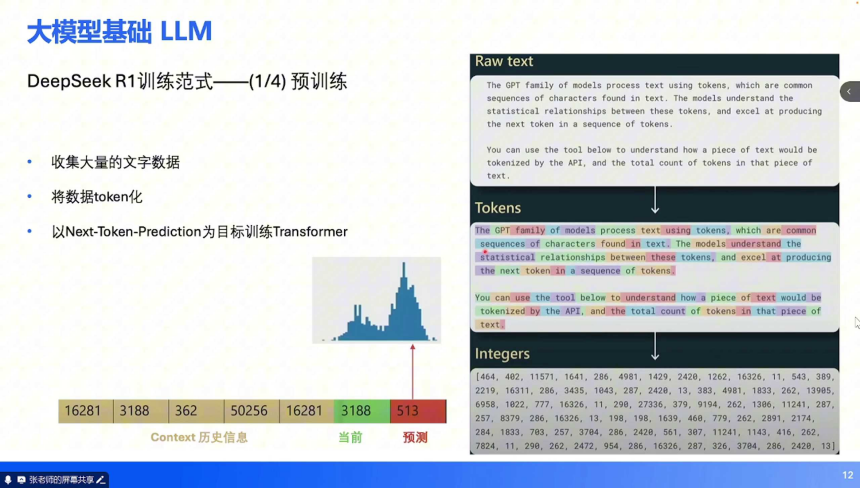

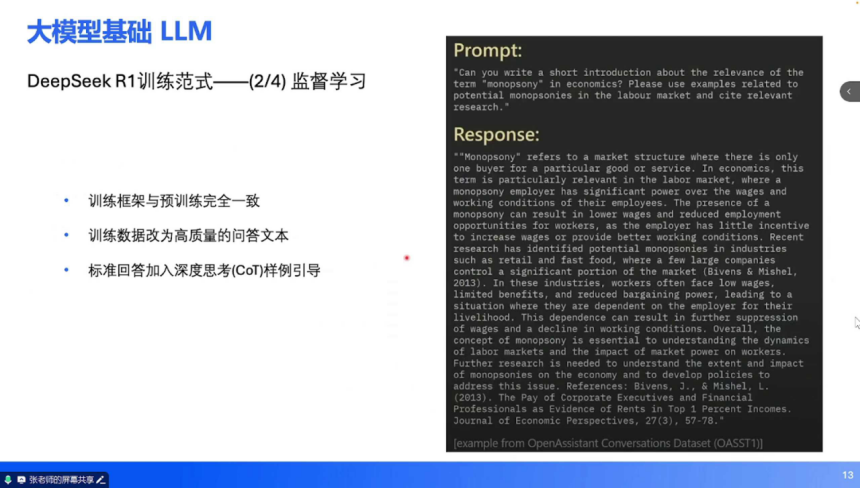

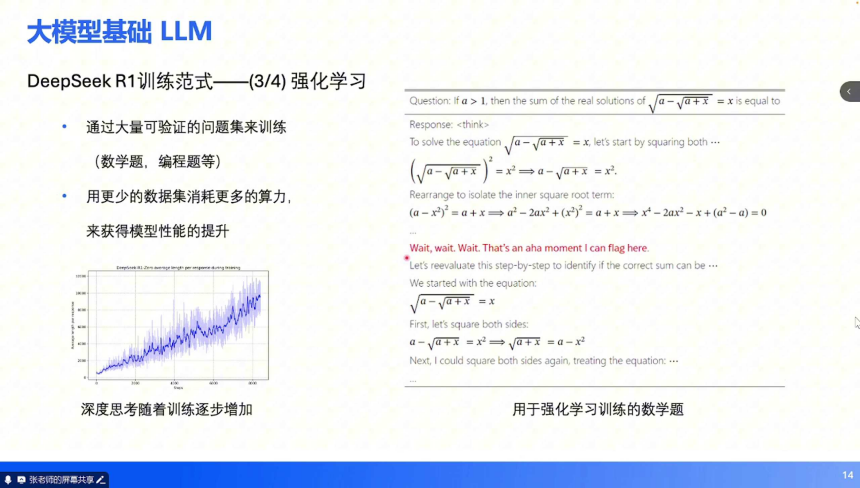

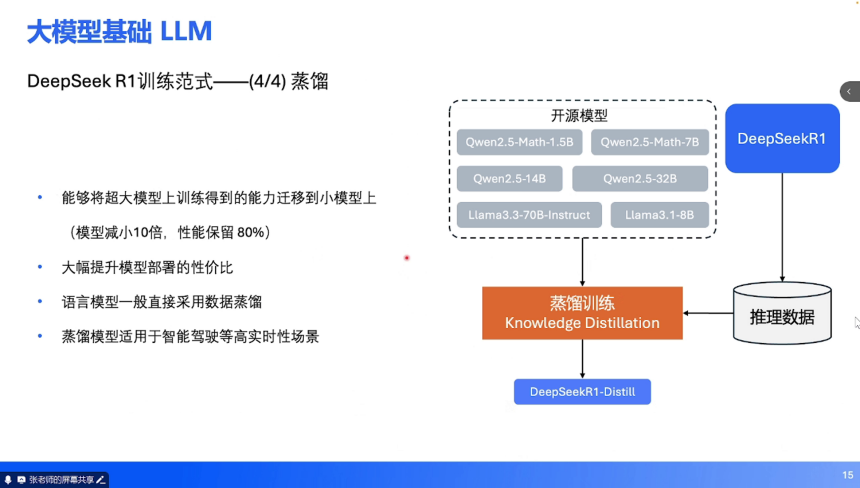

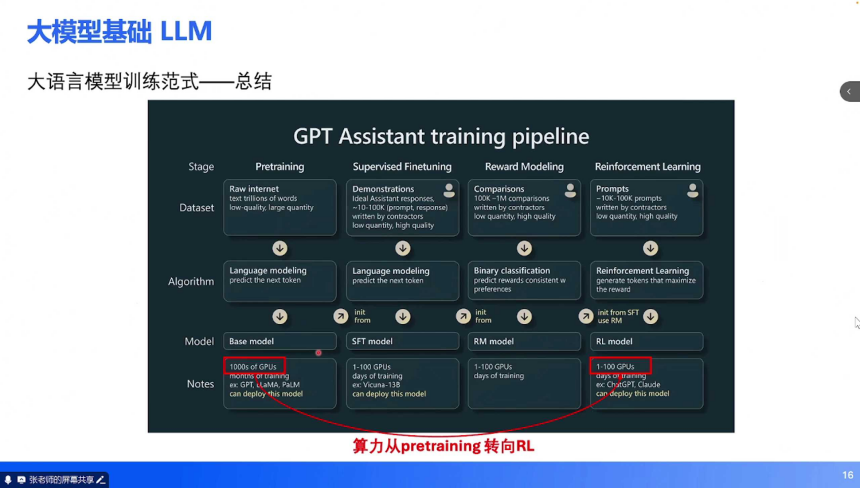
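NeRF and 3D Gaussian Splatting represent scenes differently, but both form a pixel by blending contributions along the camera ray from front to back: C = Σ_i T_i α_i c_i, with transmittance T_i = Π_{j<i} (1 − α_j). In NeRF, α_i = 1 − exp(−σ_i δ_i) comes from a sample's volume density σ_i and the step length δ_i; in 3DGS, α_i comes from each depth-sorted Gaussian's learned opacity weighted by its projected 2D footprint at that pixel. A minimal single-ray sketch follows; the early-exit threshold is an assumed value.

```python
import math


def nerf_alpha(sigma: float, delta: float) -> float:
    """NeRF-style opacity of one ray segment from volume density sigma and segment length delta."""
    return 1.0 - math.exp(-sigma * delta)


def composite(samples, stop_below=1e-4):
    """Front-to-back alpha compositing along one camera ray.

    samples: (alpha, (r, g, b)) pairs ordered from nearest to farthest.
    Returns the pixel colour and the leftover transmittance (light reaching the background).
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # T_i: fraction of light not yet absorbed by earlier samples
    for alpha, rgb in samples:
        weight = transmittance * alpha  # w_i = T_i * alpha_i
        for k in range(3):
            color[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha
        if transmittance < stop_below:  # early exit once the ray is effectively opaque
            break
    return tuple(color), transmittance


# A half-transparent red sample in front of an opaque blue one.
print(composite([(0.5, (1.0, 0.0, 0.0)), (1.0, (0.0, 0.0, 1.0))]))  # ((0.5, 0.0, 0.5), 0.0)
```

Because this blending step is differentiable, the scene representation can be fitted to recorded camera images and then rendered from viewpoints the recording vehicle never occupied, which is what makes these methods useful for simulation-based validation.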

(1) Large Language Models (LLM): The training logic of large language models provides core methodologies for end-to-end driver assistance.

- Training Paradigm: Four stages (pre-training, supervised fine-tuning, reinforcement learning, and distillation) that together balance data usage against model performance.
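Of the four stages, distillation is the easiest to show in a few lines. One classic formulation, the soft-target loss of Hinton et al. (2015), trains a smaller student to match a larger teacher's temperature-softened output distribution; it is one way to compress a large model into something small enough for in-vehicle compute. The sketch below is that textbook loss in plain Python. The temperature and the three-way "maneuver" logits are hypothetical, and real systems may distill other outputs, such as trajectories or intermediate features.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax of logits divided by a temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Soft-target distillation: T^2 * KL(teacher || student) on temperature-softened outputs."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's predictions
    kl = sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl  # T^2 keeps gradient scale comparable across temperatures


teacher = [4.0, 1.0, 0.5]  # hypothetical scores of a large model over three candidate maneuvers
print(distillation_loss([4.0, 1.0, 0.5], teacher))      # 0.0
print(distillation_loss([0.5, 1.0, 4.0], teacher) > 0)  # True
```

A higher temperature flattens the teacher's distribution, so the student also learns how the teacher ranks the less likely options, not just which one it prefers.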

...

End-to-end driver assistance represents a typical application of large model technologies in embodied intelligence. Its development path reflects the technological trends of moving from discrete to integrated systems, and from rule-driven to data-driven approaches. Currently, the industry faces three major challenges: suppressing model hallucinations, aligning the virtual and real worlds, and coordinating decisions among multiple safety systems. However, with the improvement of large model capabilities and the maturation of simulation technologies, the realization of fully end-to-end systems (Photon-to-Pedal) is no longer far off.

The Evolution of Advanced Driver Assistance Systems: From 2D Perception to End-to-End Technology
