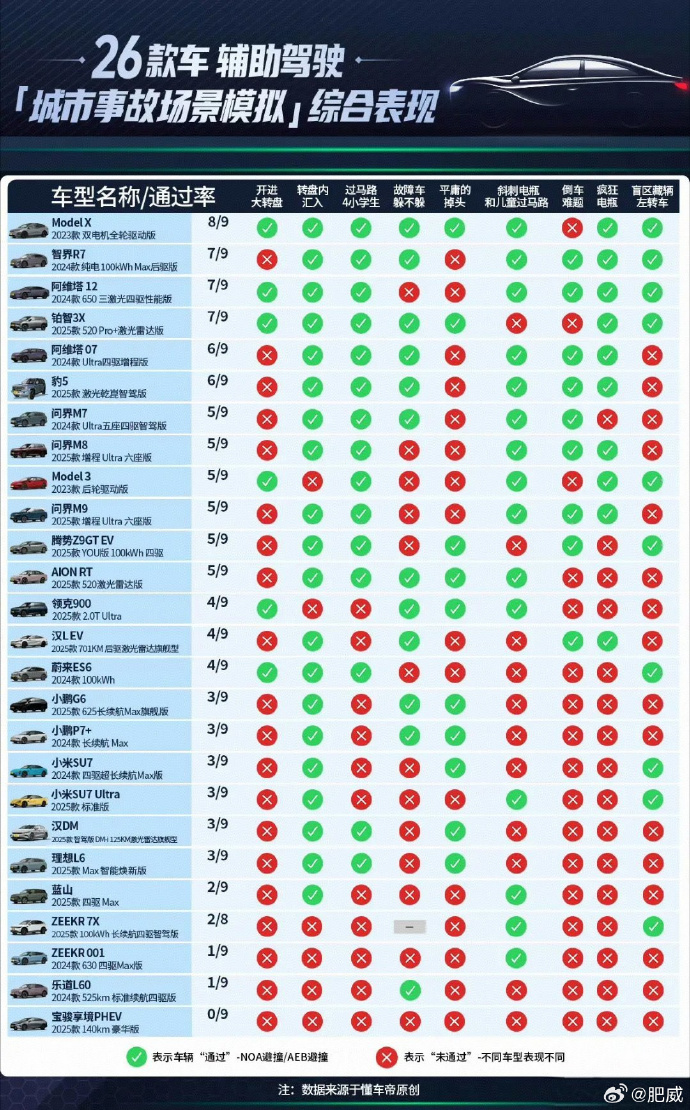

Dongchedi recently tested 36 mainstream new energy vehicles on closed highways and city roads across 15 simulated high-risk scenarios, and the results have sparked heated discussion across platforms. In summary:

1. No vehicle achieved "zero errors" across all tests.
2. On the highway, only a few models, including the AITO M9, XPeng G6, and Weipai Lanshan, passed more than half of the scenarios, while a staggering 15 models failed all six highway scenarios.
3. Tesla performed surprisingly well. The Model X failed only one scenario, making it the biggest winner in the court of public opinion.

Topics such as "domestic intelligent driving completely failed," "is assisted driving a scam?", and "it's 2025, and there's still not a single good autonomous driving system" quickly trended online. Even Elon Musk took notice from the other side of the world and liked the results, while domestic manufacturers mostly stayed silent or declined to comment. The debate online has been far more intense than anything that happened on the test track.

This article aims to answer two core questions:

1. Were there problems with Dongchedi's testing process, and did its rules have loopholes?
2. Do Dongchedi's results have reference value, and what do they mean for the industry and for consumers?

First, a look at how Dongchedi ran the test. The team rented real highways and city roads and tested nearly 40 mainstream models from more than 20 brands, including Tesla, Hongmeng Zhixing, Xiaomi, and Li Auto. The six highway scenarios covered extreme situations such as the "disappearing front car," "night construction zone obstacle avoidance," and "wild boar crossing," while the nine city scenarios covered frequently encountered dangers such as "merging in a roundabout" and "children suddenly crossing." The most talked-about scenario was the "disappearing front car": with the test vehicle traveling at up to 130 km/h, the car ahead suddenly changes lanes and reveals a stationary accident vehicle directly in its path. Of the 36 models, only five avoided it, a dismal pass rate of about 14% (5 of 36). More than 70% collided with the accident vehicle, and some nearly caused secondary accidents with forced lane changes. And that was not even the worst result: in the "wild boar crossing" scenario, only the Tesla Model X barely passed, and every other model failed outright. Watching the full test, it is easy to come away feeling that Dongchedi has exposed the "emperor's new clothes" of assisted driving.

As China's largest automotive media platform, Dongchedi deserves credit for investing substantial people and money in testing nearly every popular model. It lived up to the responsibility of a leading outlet, giving readers an intuitive sense of the risks each model faces in these extreme scenarios. Its scenario design was also quite thoughtful: apart from a few that strayed from reality, most were distilled from real accident data and represent "high-risk, low-probability" events. These are exactly the "death traps" drivers fear most in everyday driving, and exactly where assisted driving systems are most likely to fail. Exposing them to the public is clearly good for pushing automakers to confront their technical shortcomings.

However, many aspects of Dongchedi's testing lacked rigor, and the most contentious was the control of variables. Several variables that were nominally held constant did not play out the same way across cars. Following distance is one example. Dongchedi set every car's following distance to the medium level, but each manufacturer uses a different following strategy, so the actual gap to the lead car, and therefore to the hidden obstacle, varied from car to car. That gap affects braking response time and ultimately decides whether the system has enough time to avoid the hazard. Speed is another. In the "disappearing front car" scenario, Dongchedi set every car's assisted driving speed to the speed limit plus 10%, yet some models accelerated to 130 km/h while others were capped at 120 km/h. Two cars that start braking at the same point from those two speeds can end up with very different outcomes, which undermines the fairness of any side-by-side comparison.

The number of runs was also a problem. For these high-risk scenarios there was no clear standard for how many attempts each model got. Assisted driving systems are affected by the instantaneous state of their sensors and by small differences in algorithmic decisions, so a single pass or failure cannot show how reliably a model handles a scenario. That greatly weakens the statistical meaning of any ranking or "pass rate." Repeatability matters even more in scenarios like the "disappearing front car," where a rabbit car (lead car) has to make a sudden lane change and the tested car's response depends on the rabbit car hitting its speed, timing, and trajectory precisely. The footage suggests the rabbit car's speed and maneuvers were inconsistent, which significantly changed the actual speed of the car following it. Without a clear standard for repeat runs, the "pass rate" rankings the public has compiled from these results look hasty and crude.
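The speed and repeat-count arguments above can be made concrete with a rough Python sketch. It assumes both cars brake with the same constant deceleration (8 m/s² by default, an illustrative figure, not one measured in the test) and ignores reaction latency. The helpers `braking_distance_m`, `impact_speed_kmh`, and `wilson_interval` are hypothetical names written for this illustration; the Wilson score interval is a standard way to bound a pass rate estimated from very few trials.

```python
import math

KMH_TO_MS = 1 / 3.6  # km/h -> m/s


def braking_distance_m(speed_kmh: float, decel_ms2: float) -> float:
    """Distance to stop from speed_kmh at constant deceleration (no reaction delay assumed)."""
    v = speed_kmh * KMH_TO_MS
    return v * v / (2 * decel_ms2)


def impact_speed_kmh(test_kmh: float, ref_kmh: float, decel_ms2: float = 8.0) -> float:
    """Speed at which a car at test_kmh reaches the obstacle if it starts braking
    exactly where a car at ref_kmh would just stop in time.
    Assumption: both cars brake with the same constant deceleration."""
    d = braking_distance_m(ref_kmh, decel_ms2)
    v = test_kmh * KMH_TO_MS
    v_end_sq = v * v - 2 * decel_ms2 * d  # kinematics: v_end^2 = v^2 - 2*a*d
    return math.sqrt(max(v_end_sq, 0.0)) / KMH_TO_MS


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a pass rate observed over `trials` runs."""
    p = successes / trials
    denom = 1 + z * z / trials
    center = (p + z * z / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials)) / denom
    return max(0.0, center - half), min(1.0, center + half)


if __name__ == "__main__":
    # Speed cap: 120 km/h vs 130 km/h under the same illustrative deceleration.
    print(f"Stopping distance at 120 km/h: {braking_distance_m(120, 8.0):.1f} m")
    print(f"Stopping distance at 130 km/h: {braking_distance_m(130, 8.0):.1f} m")
    print(f"130 km/h car reaches the obstacle at {impact_speed_kmh(130, 120):.1f} km/h")
    # Repeat count: what one run per model can and cannot tell us.
    print("1 pass in 1 run   ->", wilson_interval(1, 1))
    print("0 passes in 1 run ->", wilson_interval(0, 1))
```

Under these assumptions, a car at 130 km/h that starts braking exactly where a 120 km/h car would just stop still reaches the obstacle at about 50 km/h, because √(130² − 120²) = 50, and that holds whatever the shared deceleration is. Likewise, a single passing run is consistent with a true success rate anywhere from roughly 21% to 100%, which is why one attempt per model says little about stability.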

Most astonishing of all, multiple staff members and filming equipment were stationed in the right-hand emergency lane during these high-speed runs, a serious lapse in safety awareness. At a regular proving ground, that alone would likely get a team barred from testing. The people on the right also skewed the results. Hongmeng Zhixing models with EAES and NIO and Li Auto models with AES can look for a suitable safe space to swerve into when the car ahead changes lanes and reveals an obstacle. With the right emergency lane clear, they could have veered right safely; with people and equipment standing there, that escape route was blocked. Taken together, these lapses and questionable rules objectively affected the final data and scores.

In conclusion, as a media outlet that also conducts testing, we understand how difficult it is to plan a test at this scale. But when the process is neither transparent nor public, the fairness and objectivity of its results are easily called into question, so openness is the key to dispelling such doubts. We urge Dongchedi to build an open, transparent testing alliance with multiple participants for future large-scale tests, which we would be glad to join to provide more professional testing services. Together, we can make every piece of data open and transparent and strengthen the tests' credibility through mutual checks and balances. Ultimately, testing must give consumers real, useful information that helps them make informed purchasing decisions, and process design, standards, and result announcements should all treat that goal as the highest principle.

Testing Reveals Weaknesses in Chinese Autonomous Driving Systems