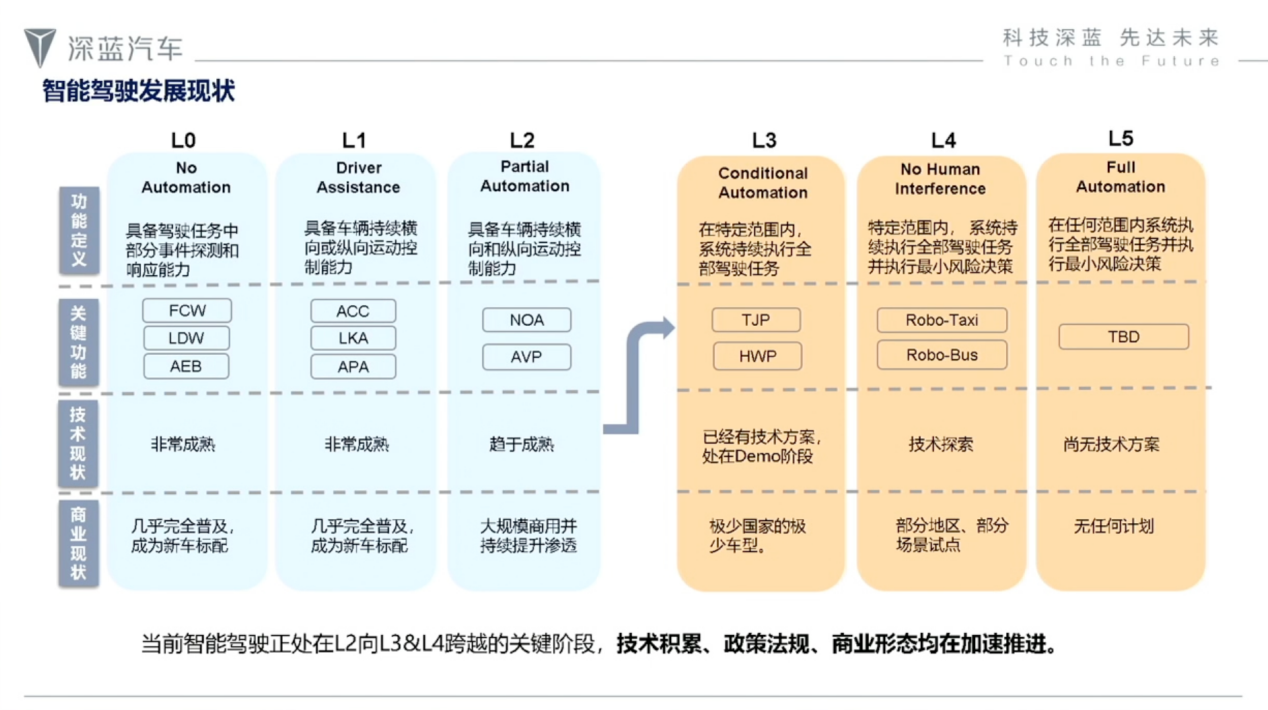

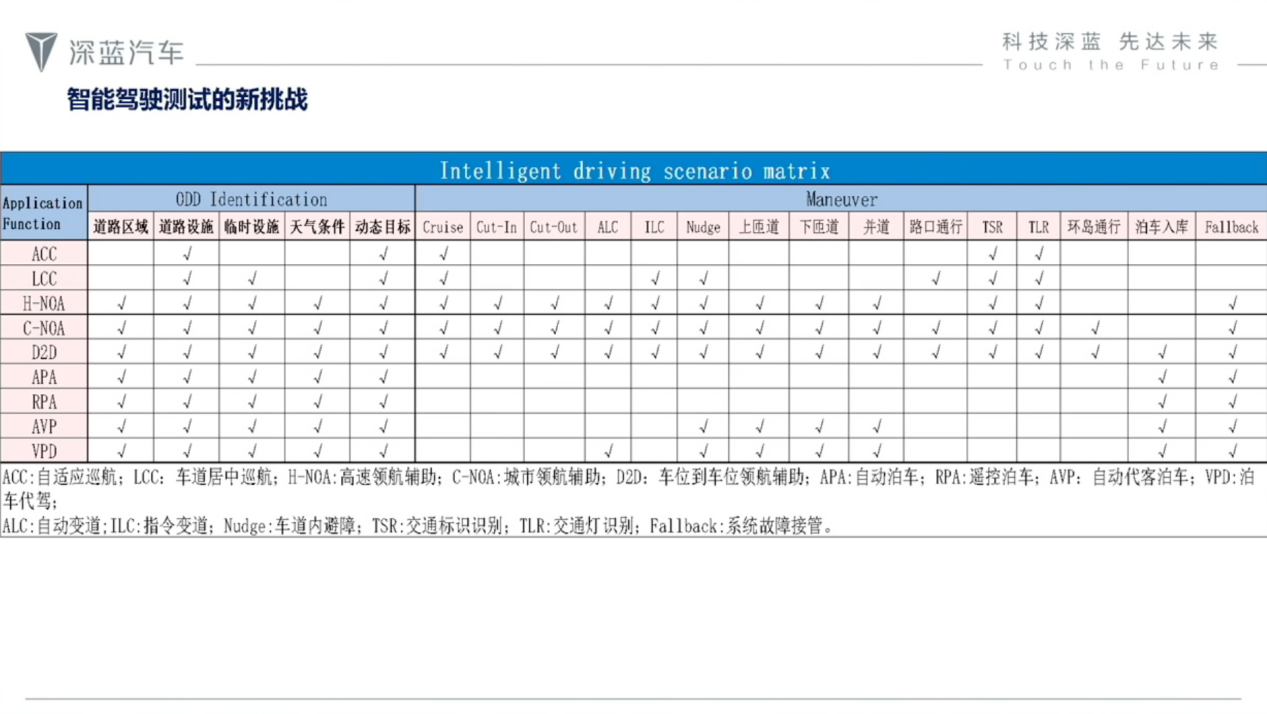

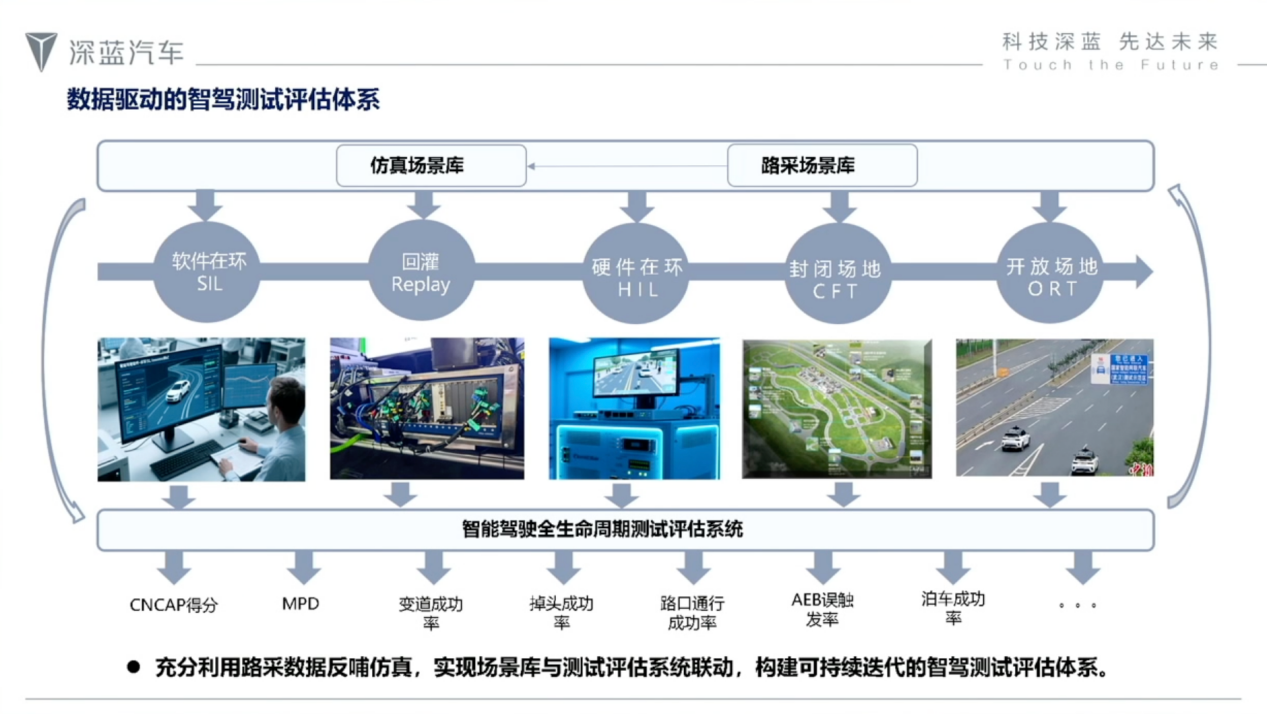

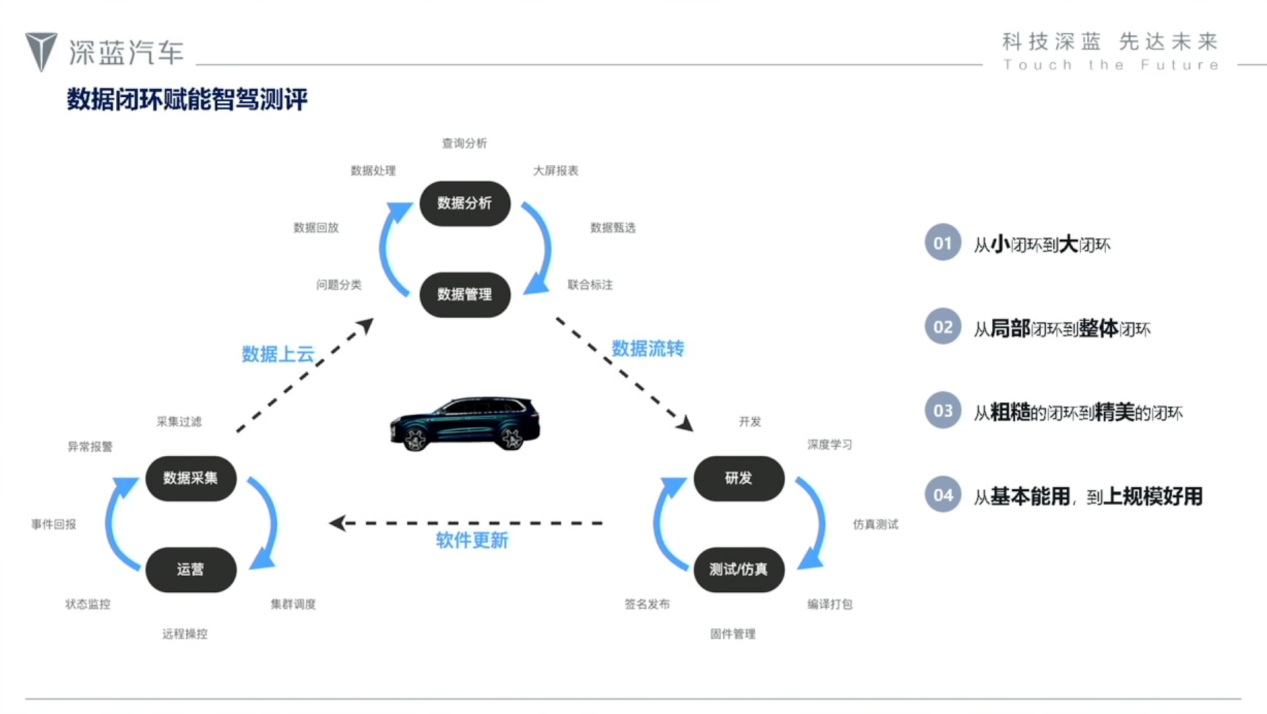

On July 22, 2025, at the 8th Intelligent Assisted Driving Conference, Wen Xie, Deputy Chief Engineer of Assisted Driving Testing at Deep Blue Automotive Technology Co., Ltd., pointed out that intelligent assisted driving technology is approaching the critical L3 stage. Although regulations have not yet been established, many companies are actively preparing. System designs are moving toward multiple redundancy, with backups for the assisted driving controller, chassis, steering, and power supply, which significantly increases the testing workload. In addition, functional safety and safety of the intended functionality (SOTIF) must be implemented at the product level in the L3 stage. He noted that testing faces more complex scenarios, longer test cycles, and rising costs, so traditional testing methods are no longer adequate.

To tackle these challenges, Wen proposed building a data-driven testing and evaluation system for intelligent assisted driving. The system should feed road data back into the simulation system and link the scenario library with the evaluation system. It should also integrate data collection with testing, use cloud-based data analysis, and apply technologies such as neural networks to virtualize scenarios, producing a testing and evaluation system with high confidence and high coverage.

Currently, intelligent assisted driving is at a critical transition from L2 to L3. Despite the absence of relevant regulations, numerous solution providers have already begun work. A large number of L3 systems are expected to enter service in the next one to two years. The field is accelerating in technological accumulation, policy formulation, and the exploration of business models.
As testers, we cannot wait until L3 intelligent assisted driving systems are widely deployed before starting our work; we should proactively establish a more robust testing and evaluation system. Judging from the development trends of existing solutions, systems are evolving toward multiple chips and controllers, with redundant designs in the chassis and power supply systems, which will greatly increase our testing workload.

Another key challenge is functional safety and safety of the intended functionality (SOTIF). In the L1 and L2 stages, both were discussed mainly at the process level. In the L3 stage, they must actually be implemented in the product, which increases testing difficulty and workload. L2 systems can handle over 95% of scenarios; the remaining 5% require manual takeover. In the L3 stage, we will need to invest 95% of our effort to cover that remaining 5% of scenarios.

To describe the distribution of scenarios, I model it as a three-dimensional matrix. The horizontal axis represents maneuvers, such as cruising, lane changes, and merging. The vertical axis represents the intelligent assisted driving functions as perceived by users, including ACC, LCC, NOA, and AVP. The third dimension adds operational design domain (ODD) factors such as road structure and weather conditions.

Common testing methods today include software-in-the-loop (SIL), replay, and hardware-in-the-loop (HIL) testing, as well as closed-course and open-road tests. Given the complexity and growing number of scenarios for L3 systems, relying solely on these existing methods is insufficient. I believe we need to make full use of data for systematic optimization. First, we need to establish a link between road-collected data and simulation data, using scenarios collected by real vehicles to enrich and improve simulation scenarios, so that real-vehicle testing feeds back into simulation testing.
Second, a full-lifecycle testing and evaluation system should be constructed, with evaluation indicators set at the module, system, and vehicle levels for the different stages of product development. Third, a linkage mechanism between the scenario library and the evaluation system should be established. The scenario library is sometimes accumulated blindly and needs to be supplemented and improved based on test evaluation results. Continuously mining and iterating scenarios is also crucial. As the vehicle fleet grows, most collected scenarios become low-value repeats, so an effective mechanism is needed to dig out high-value corner cases.

Regarding data utilization, we cannot separate the data closed loop from the testing closed loop, and we should not overlook the potential value of data infrastructure. Instead, the testing system should be built along several dimensions. First, data collection can start with a small closed loop of collection and operation. At this stage, data collection vehicles and test vehicles can share the same architecture, with no need to build dedicated data collection vehicles. Second, data analysis should move to the cloud, where data is replayed, classified, and organized to build a library of collected scenarios. Third, for testing itself, we can use data replay or simulation testing.

But how do we establish the connection between data and simulation? The key lies in closed-loop virtualization, which is currently a hot topic in the industry. We plan to use technologies such as 3D Gaussian splatting to achieve this. Our current thinking is to fully exploit the value of real-vehicle data, transforming it into digital assets that serve virtual simulation.
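The three-dimensional scenario matrix and the linkage between the scenario library and evaluation results, both described above, can be sketched roughly as follows. This is only an illustration under stated assumptions, not the speaker's tooling: the axis values are placeholders (the talk names the axes, not an exhaustive list), and `build_matrix` and `coverage_gaps` are hypothetical helper names. Not every cell of such a matrix is meaningful in practice.

```python
from collections import Counter
from itertools import product

# Hypothetical axis values for illustration only.
MANEUVERS = ["cruise", "lane_change", "merge"]
FUNCTIONS = ["ACC", "LCC", "NOA", "AVP"]
ODD_CONDITIONS = ["highway_clear", "highway_rain", "urban_clear", "urban_night"]


def build_matrix():
    """Enumerate every cell of the maneuver x function x ODD matrix."""
    return list(product(MANEUVERS, FUNCTIONS, ODD_CONDITIONS))


def coverage_gaps(results, min_runs=1):
    """Return (under-tested cells, failing cells sorted by failure count).

    `results` is an iterable of (maneuver, function, odd, passed) tuples.
    """
    runs = Counter()
    failures = Counter()
    for maneuver, function, odd, passed in results:
        cell = (maneuver, function, odd)
        runs[cell] += 1
        if not passed:
            failures[cell] += 1
    untested = [cell for cell in build_matrix() if runs[cell] < min_runs]
    failing = sorted(failures, key=failures.get, reverse=True)
    return untested, failing


# Example: a handful of made-up test results fed back into the library.
results = [
    ("cruise", "ACC", "highway_clear", True),
    ("lane_change", "NOA", "highway_rain", False),
    ("lane_change", "NOA", "highway_rain", False),
    ("merge", "NOA", "urban_night", False),
]
untested, failing = coverage_gaps(results)
print(f"{len(untested)} of {len(build_matrix())} cells still need scenarios")
print("Most frequently failing cell:", failing[0])
```

Cells that are untested or that fail repeatedly are the ones where new scenarios are worth collecting, which is one way to keep library growth from being blind.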
The approach for turning road data into simulation assets can be divided into four steps: first, collect data multiple times on specific roads; second, classify and label the collected data with automated labeling tools; third, build high-confidence static scenes using methods such as 3D Gaussian splatting; finally, configure dynamic traffic flow and sensor models using a simulation engine with strong real-time performance. Once these steps are complete, the data-driven simulation system can be considered established.

For the testing and evaluation system, we assess at the module, system, and vehicle levels. Under a rule-based development approach, module-level evaluation is highly effective. For example, when evaluating the perception module, we separately assess traffic participants, traffic lights, and traffic signs, and the planning and control module is also tested on its own. With rapid technological iteration, new technologies such as end-to-end models, VLA, and world models continue to emerge, and as they mature the existing module-level evaluation methods will no longer apply. A two-stage end-to-end architecture, in which the intermediate interface is explicitly expressed, still retains evaluable interfaces; a one-stage end-to-end system has no explicit interfaces, so its modules cannot be evaluated separately.

System-level evaluation is similar to vehicle-level evaluation but more comprehensive, and it is usually carried out in simulation. It requires higher test coverage, which is difficult to achieve with real-vehicle testing. Real-vehicle testing is passive. For example, if we want to test a lane change by a target vehicle, a round trip between Jiading and Pudong in Shanghai may not encounter a single one, and even if it does, the speed and lane-change distance may not match what the test requires.
Simulation testing, by contrast, is proactive and allows any variable to be set. The premise, of course, is that simulation must solve its confidence problem. If it is built on the data-driven, high-fidelity scenario generation method described above, system-level simulation testing has significant advantages in coverage, efficiency, and cost.

Overall, I believe the scenario library is the key link between simulation and real-vehicle testing, and the two should complement and coordinate with each other. Problems discovered in real-vehicle testing can drive improvements in simulation, while defects exposed in simulation can guide the targeted design of real-vehicle tests.

Data should also be used efficiently, and new technologies can fully unlock its value. Specifically, technologies such as 3D Gaussian splatting and world models can transform open-loop, road-collected scenarios into simulation resources for closed-loop testing. This path is particularly important for developing higher-level intelligent assisted driving systems. As end-to-end models, VLA, and world models evolve, high-confidence simulation systems will show tremendous potential, and their scope of application is expected to expand further in the next one to two years. To develop L3 and even L4 intelligent driving, the remaining 5% long-tail problem must be solved. More efficient, higher-confidence simulation has become a necessary choice and an essential path to advanced intelligent driving.
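The contrast between passive road testing and proactive simulation can be made concrete with a small parameter sweep over a target-vehicle lane change (cut-in). The sketch below is a deliberately simplified illustration and not the simulation system described in the talk: it assumes the target vehicle holds a constant speed, the ego vehicle brakes at a constant deceleration after a fixed reaction time, and all names and default values (`min_gap`, `sweep`, 0.5 s, 6 m/s²) are hypothetical.

```python
from itertools import product


def min_gap(ego_speed, target_speed, cut_in_gap, reaction_time=0.5, decel=6.0):
    """Smallest longitudinal gap in meters after a cut-in.

    Speeds are in m/s, gaps in m. Assumes a constant-speed target and
    constant ego braking that starts after `reaction_time` seconds.
    """
    closing = ego_speed - target_speed
    if closing <= 0:
        # The ego vehicle is not closing in, so the gap never shrinks.
        return cut_in_gap
    # Gap lost during the reaction time plus gap lost while braking
    # down to the target vehicle's speed.
    return cut_in_gap - closing * reaction_time - closing ** 2 / (2 * decel)


def sweep(ego_speeds, target_speeds, gaps):
    """Evaluate every combination and return the ones that end in contact."""
    failures = []
    for v_ego, v_tgt, gap in product(ego_speeds, target_speeds, gaps):
        if min_gap(v_ego, v_tgt, gap) <= 0:
            failures.append((v_ego, v_tgt, gap))
    return failures


# Every combination is tested on demand, instead of waiting for it on the road.
for case in sweep(ego_speeds=[20, 30], target_speeds=[10, 20], gaps=[10, 20, 40]):
    print("collision case (ego m/s, target m/s, gap m):", case)
```

A real setup would replace `min_gap` with a closed-loop run of the system under test in a validated simulator; the point here is only that the speed and cut-in distance become inputs rather than chance events.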

Deep Blue Automotive Highlights Challenges and Strategies for L3 Autonomous Driving Testing
